My old team at Atlassian shared a lot of information about the types of tests you can (and should!) write for large scale software development. But practical tips about how you can actually develop this testing infrastructure are harder to find, scattered across many different conference presentations and documents.

I was Head of Engineering for Confluence from 2012 to 2016, and during this time we evolved the product rapidly while also dramatically expanding the customers we served across both self-hosted and cloud editions of the product.

Today I wanted to share some of my learnings and specific approaches we used in the Confluence engineering team to successfully develop a broad set of automated tests, despite many competing priorities, and ultimately put them to use to support a highly reliable cloud service with millions of users.

1. Start with acceptance (end-to-end) tests, not unit tests

A lot of automated testing HOWTOs start with unit testing as the foundation of your test infrastructure, but our team found our automated acceptance tests far more valuable in catching regressions and validating the behaviour of the application.

An automated acceptance test for us is a test which exercises the live application through a programmatic web browser. Initially, we used JWebUnit, which had a basic HTML-only processor, but over time this expanded to include Selenium and its later incarnation WebDriver. These would drive a headless Chrome-like browser, which also gives you the ability to test all your JavaScript code.

We called these acceptance tests or functional tests, but I think more recently they are known as “end-to-end” tests instead.

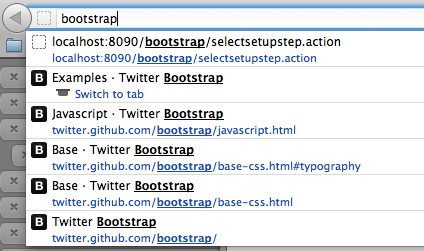

Our acceptance test suite started from the first screen a user sees - the “setup wizard”, stepped through various ways to set up the application, then moved on to a test for each feature in turn. The tests would create pages, demonstrate various forms of content, test the editor, check permissions, exercise admin screens, turn on and off different options.

Occasionally, an earlier test would leave the system in a bad state, resulting in a string of subsequent test failures. These were sometimes tricky to debug, but always turned up critical problems which were important to fix for the stability of the product.

When I started on the team in 2006, we had perhaps 20-30 acceptance test classes, with a few hundred test methods. About 10 years later, we had hundreds of classes with 3000-4000 test methods. These were combined in multiple different suites to exercise the various editions of the product.

We also had a lot of unit tests, but our unit tests turned out to be much less useful than acceptance tests for two reasons:

- Fragile dependencies on core code - our unit tests used mock and stub objects to test interactions between different services and managers. These were not difficult to write, but maintenance was an issue. Most production code changes required also updating the corresponding mock/test objects. This meant a lot of busywork around the test code which didn’t add value in finding new bugs.

- Limited scope of testing - the unit tests were great in some areas, like complex business logic in content permissions, but most of the important bugs in Confluence tended to be interactions between disparate components, which unit testing did not expose.

These drawbacks of unit tests meant we got much more bang-for-buck from our acceptance (or end-to-end) tests. So that was naturally where more of our testing investment went. And that meant we found several ways to make writing these acceptance tests much easier over time.

2. Write new APIs for use with automated tests

To speed up tests and make writing them easier, we added a set of “test APIs” which could take care of common test functionality:

- Resetting the system to a known good state

- Creating some test data for the test scenario

- Triggering scheduled tasks on-demand

- Querying some internal state of the system (e.g. index queue), to make sure behind-the-scenes activities had happened as expected.

To implement the test APIs, we leveraged the dynamic plugin system in our apps, and installed a “func test” plugin at the start of our test run. This prevented accidentally exposing an API to customers with a “delete all data” method, which was actually something we used in our tests to reset the system to a known state.
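
To make this concrete, here is a minimal sketch of what a test-only API like this could look like in Java. Everything here is hypothetical: the names `FuncTestApi` and `InMemoryFuncTestApi`, the method names and the `flush-index-queue` task name are mine for illustration, not the actual Confluence plugin. The implementation is an in-memory stand-in so the sketch runs without a server.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical test-only API; assumed to be installed only during test runs. */
interface FuncTestApi {
    void resetToKnownState();               // wipe data back to a clean baseline
    void createPage(String title);          // create test data for a scenario
    void runScheduledTask(String taskName); // trigger a scheduled job on demand
    int indexQueueSize();                   // expose internal state for assertions
}

/** In-memory stand-in so the sketch is runnable on its own. */
class InMemoryFuncTestApi implements FuncTestApi {
    private final List<String> pages = new ArrayList<>();
    private final Deque<String> indexQueue = new ArrayDeque<>();

    @Override
    public void resetToKnownState() {
        pages.clear();
        indexQueue.clear();
    }

    @Override
    public void createPage(String title) {
        pages.add(title);
        indexQueue.add(title); // new content waits in the queue until indexed
    }

    @Override
    public void runScheduledTask(String taskName) {
        if ("flush-index-queue".equals(taskName)) {
            indexQueue.clear(); // pretend the indexer processed everything
        } else {
            throw new IllegalArgumentException("Unknown task: " + taskName);
        }
    }

    @Override
    public int indexQueueSize() {
        return indexQueue.size();
    }

    int pageCount() {
        return pages.size();
    }
}
```

A test would call `resetToKnownState()` first, create the pages it needs, trigger the indexing task rather than waiting for the schedule, and then assert that `indexQueueSize()` is zero.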

We built Java wrappers around these APIs so they were easy to call from the test code. That included pretty neat testing features, like the ability for a test to perform some action on the front-end, then wait up to a few seconds to check that the expected behaviour was triggered on the server side.
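
The "wait up to a few seconds" behaviour can be sketched as a small polling helper. This is an illustration under my own assumptions, not our actual wrapper code: the class name `Poller` and the method `waitUntil` are hypothetical, and the condition would normally call a server-side test API.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

/** Hypothetical helper: poll a condition until it holds or a timeout passes. */
final class Poller {
    private Poller() {}

    /**
     * Checks the condition immediately, then re-checks every interval.
     * Returns true as soon as the condition holds, false if the timeout
     * elapses first or the thread is interrupted.
     */
    static boolean waitUntil(BooleanSupplier condition, Duration timeout, Duration interval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                return false;
            }
        }
    }
}
```

A test could then perform an action in the browser and follow it with something like `Poller.waitUntil(() -> api.indexQueueSize() == 0, Duration.ofSeconds(5), Duration.ofMillis(100))`, where `api` is whatever test API client you have. The test passes as soon as the server catches up instead of sleeping for a fixed time.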

Automated testing led to us expanding some of the production APIs available to customers as well. For example, we initially had a basic RPC API for creating Confluence pages, which was fairly limited. As we wrote more tests, we needed the ability to test setting a date when creating a blog post. So this was added to the public RPC API in part due to our need to use it for testing.

Building additional test APIs increases the value of time spent on automated testing, as it expands the surface area of your product APIs and also makes writing future tests faster and easier.

The lesson here is to not lock up logic inside your test code, perhaps with a goal of making tests self-contained, but instead build abstractions underneath your tests. These can be put into test-specific APIs or even customer-facing APIs where appropriate.

3. Write tests as you write features, add tests as you fix bugs

A key aspect of automated testing is making it part of the team’s routine. For our team, there were two key points in our workflow where automated testing was required:

- First, when writing a feature, each developer was required to write automated unit and acceptance tests for that feature. This was included in our team’s “Definition of Done” as a baseline requirement.

- Second, when fixing a bug, we would expect each bug to have a test written that reproduced that bug. So you’d have a red test straight away, and then when the bug was fixed, the new test would pass.

There may have been the occasional exception, but these rules served us well in encouraging everyone to contribute to the automated testing work as we enhanced and fixed bugs in the product.

Having these two activities in our workflow was sufficient to drive the development of thousands of test cases over the decade or so I worked on the product. It’s critical that any team who is serious about automated testing make the development of tests an expected part of all feature development and bug fixing.

4. Peer review the feature, not just the code

The other part of our process that evolved over time was the review process. Like many teams, we used a peer code review process via pull request in Bitbucket (and formerly Crucible), so all code was reviewed prior to merging the code into the main branch. But this wasn’t the important bit.

In addition to code review, we had a stage in our Jira ticket workflow called “quality review”, which was about looking at the actual feature (or bug fix), making sure the feature was working properly. Another developer on the team actually had to try out your feature and make sure it worked properly.

Quality review proved really impactful in ensuring features were developed properly, not rushed out with bugs or obvious issues. It also had a side effect of improving our automated test coverage.

If the quality review developer found some obvious bugs with the feature, they would naturally wonder why the tests for the feature hadn’t caught the issue. And in this way, there was a second forcing function (in addition to the “Definition of Done” mentioned above) to catch inadequate automated tests prior to completing a feature.

Having a peer review process for feature quality (in addition to code quality) proved to be really valuable, not only for delivering higher quality features but also for identifying gaps in our acceptance test coverage.

5. Track then fix or remove flaky tests

The bane of any automated testing process is flaky tests. These waste developer time and reduce trust in the overall testing system.

Dealing with flaky tests requires three behaviours:

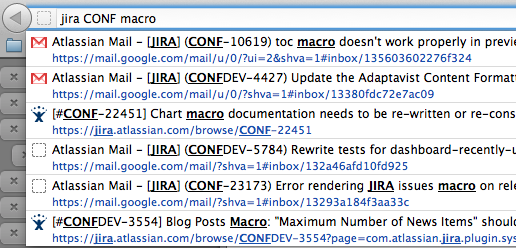

- Track them. Each flaky test should have a Jira ticket, and every time there is a build failure, you should pop a comment on the ticket. This makes it possible to track and prioritise flaky test failures based on how frequent they are.

- Fix them. Each iteration, schedule time to fix flaky tests or other painful aspects of your build system. Every team needs to allocate time to “sharpen the saw”, without which your development speed will eventually grind to a halt.

- Delete them. If, after spending a bit of time on a test, you cannot make it 100% reliable, then delete it. A flaky test is worse overall than some minor bugs in your product. You can always write a new test if you really need to, hopefully with less flakiness the second time around.
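
We did the tracking with Jira comments, but the same prioritisation can be sketched in code if your CI system can export test results. The sketch below is hypothetical (the `TestRun`, `FlakyStat` and `FlakyTestReport` names are mine) and makes a simplifying assumption: a test counts as flaky if it has both passed and failed across the recorded builds. A test that fails every time is treated as a genuine failure, not a flaky one.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical record of one test outcome in one build. */
final class TestRun {
    final String testName;
    final boolean passed;

    TestRun(String testName, boolean passed) {
        this.testName = testName;
        this.passed = passed;
    }
}

/** Failure statistics for one test across the recorded builds. */
final class FlakyStat {
    final String testName;
    final int runs;
    final int failures;

    FlakyStat(String testName, int runs, int failures) {
        this.testName = testName;
        this.runs = runs;
        this.failures = failures;
    }

    double failureRate() {
        return (double) failures / runs;
    }
}

final class FlakyTestReport {
    private FlakyTestReport() {}

    /** Returns tests with mixed results, most frequently failing first. */
    static List<FlakyStat> rank(List<TestRun> history) {
        Map<String, int[]> counts = new LinkedHashMap<>(); // name -> [runs, failures]
        for (TestRun run : history) {
            int[] c = counts.computeIfAbsent(run.testName, k -> new int[2]);
            c[0]++;
            if (!run.passed) {
                c[1]++;
            }
        }
        List<FlakyStat> flaky = new ArrayList<>();
        for (Map.Entry<String, int[]> e : counts.entrySet()) {
            int runs = e.getValue()[0];
            int failures = e.getValue()[1];
            if (failures > 0 && failures < runs) { // assumption: mixed results means flaky
                flaky.add(new FlakyStat(e.getKey(), runs, failures));
            }
        }
        Comparator<FlakyStat> byRate = Comparator.comparingDouble(FlakyStat::failureRate);
        flaky.sort(byRate.reversed());
        return flaky;
    }
}
```

The top of the ranked list is where the next "sharpen the saw" time should go, and anything that stays near the top after a fix attempt is a candidate for deletion.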

When fixing flaky tests, it is useful to apply tip #2 above, and aim to build APIs that systematically reduce flakiness. Often flakiness is due to timing issues. A test makes a change to the system, then the assertion which follows afterwards fails if the change takes too long. Or it fails if the change occurs too quickly.

In these cases, building APIs that allow the test code to reliably check for the change to the system, regardless of its timing, can fix flakiness not just in one test, but in all subsequent tests which do the same thing.
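
One way to get this timing independence, sketched here under my own assumptions, is for the test API to expose a counter that only ever increases, instead of a transient "in progress" flag. The test snapshots the counter before acting, then waits for it to move. It no longer matters whether the background job finished before the test started looking or a second later. `FakeIndexer` and `TimingSafeCheck` are hypothetical names, and the indexer is a stand-in for a real background job.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical background job that exposes a monotonically increasing counter. */
class FakeIndexer {
    private final AtomicLong completed = new AtomicLong();

    /** Starts a job that completes after roughly the given delay. */
    CompletableFuture<Void> reindexAsync(long delayMillis) {
        return CompletableFuture.runAsync(() -> {
            try {
                Thread.sleep(delayMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            completed.incrementAndGet();
        });
    }

    long completedJobs() {
        return completed.get();
    }
}

final class TimingSafeCheck {
    private TimingSafeCheck() {}

    /** True once the counter has moved past `before`; false on timeout or interrupt. */
    static boolean awaitJobCompletedSince(FakeIndexer indexer, long before, long timeoutMillis) {
        long deadline = System.nanoTime() + timeoutMillis * 1_000_000L;
        while (indexer.completedJobs() <= before) {
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            try {
                Thread.sleep(5);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return true;
    }
}
```

The important detail is taking the `before` snapshot prior to triggering the change. A check against a transient flag such as "is indexing running?" can miss a job that finishes quickly; a check against the counter cannot.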

In my experience, the best solution to a flaky test is often a new test API, which can then be used by future test cases to avoid the same timing issues.

Putting your tests to use

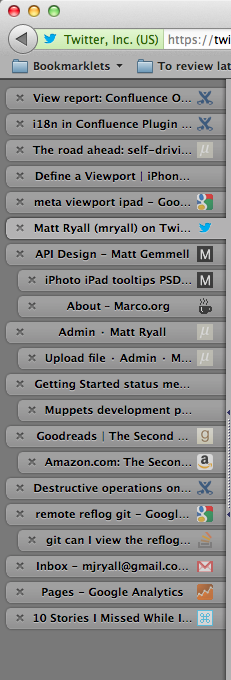

Once you have a suite of reliable automated tests for your application, you will find many ways to put them to use. We used them in all these ways:

- Developers run them locally to get feedback on their changes

- CI runs them on development branches so devs get feedback before review

- Release branches run a more comprehensive set, with some upgrade tests, to check all is good before we ship a self-hosted release

- For cloud deployment, we ran a subset of “smoke tests” against “canary” instances to check that there was nothing amiss after an upgrade

- We used them to create demo data for the marketing team

- We converted some of them into performance tests so we could baseline and track our application performance over time.
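
Running different subsets in different places needs some way to label tests. As a rough illustration, not a description of our actual setup, here is a hand-rolled sketch using a custom annotation and reflection. The `@Smoke` annotation, the `SuiteSelector` class and the test method names are all hypothetical. In practice you would more likely use your test framework's own mechanism, such as tags in JUnit 5 or groups in TestNG.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical marker for tests that are cheap and safe to run against a live canary. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Smoke {}

/** Stand-in acceptance test class; method bodies omitted for brevity. */
class PageAcceptanceTests {
    @Smoke void canViewDashboard() {}
    @Smoke void canCreatePage() {}
    void canRestrictPagePermissions() {}
    void canExportSpaceToPdf() {}
}

final class SuiteSelector {
    private SuiteSelector() {}

    /** Returns the names of the test methods to run, sorted for a stable order. */
    static List<String> select(Class<?> testClass, boolean smokeOnly) {
        List<String> names = new ArrayList<>();
        for (Method m : testClass.getDeclaredMethods()) {
            if (m.isSynthetic()) {
                continue; // skip compiler-generated methods
            }
            if (!smokeOnly || m.isAnnotationPresent(Smoke.class)) {
                names.add(m.getName());
            }
        }
        Collections.sort(names); // getDeclaredMethods() has no guaranteed order
        return names;
    }
}
```

With this in place, a release build would call `select(PageAcceptanceTests.class, false)` to run everything, while a post-deployment check would pass `true` and run only the smoke subset.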

The sky is really the limit once you have spent the time to automate the testing of your code.

Summary

So let’s wrap up the practical suggestions for expanding automated testing in your team:

- Start with acceptance (end-to-end) tests, not unit tests

- Write new APIs for use with automated tests

- Write tests as you write features, add tests as you fix bugs

- Peer review the feature, not just the code

- Track then fix or remove flaky tests

If you have any questions about testing processes, or how to get your team writing more automated tests, please get in touch.